NOTE: This script was originally developed to compare table row counts. With the modifications described below, it can also help if you have a database that is regularly shipped to another server and loaded (ETL) into another database, and you are worried that some records aren't being transferred properly (or at all).

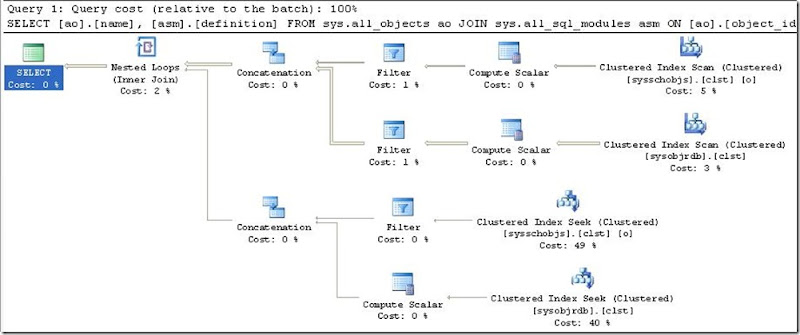

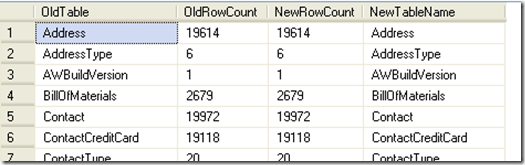

The other day I came across a question about how to compare the tables in one database to the tables in another database as part of a migration project. This person had a database on an older server running SQL 2000 and chose to migrate it to a new server running SQL Server 2005. They wanted to visually display a comparison of the two databases, table by table, to prove the conversion was successful. Results meeting these requirements are shown in Figure 1.

Figure 1 (results of running sp_CompareTables_All)

In response, I started developing a TSQL script that creates a stored procedure you run from the current database, pointing it at the location of the original database. The script collects the tables and counts the records within each table for both databases. It displays the table names on the outside (left and right) of the results and the record counts next to each other on the inside (next to their table names), which allows for a very simple and pleasing visual comparison. I wanted to avoid cursors and other techniques that can bog down system resources (such as the stored procs 'sp_MSForEachDB' and 'sp_MSForEachTable'), and I did.

Now, this is a rough draft that I threw together and tested over a lunch period, so there are some issues in the script that can still be cleaned up, and it lacks the ability to detect if a table exists in one database but not the other.

At the end of the article I'll also show a quick change that switches the results from displaying all tables and their row counts to displaying only the tables with mismatched row counts (which may be useful if you want to use this script to troubleshoot databases that tend not to migrate all records).

The first problem is how best to access the server with the original database. There are many options; I chose the built-in stored procedure "sp_addlinkedserver", which is simple to implement and integrates seamlessly into TSQL code. Testing for this script was performed on the AdventureWorks database (developed for SQL 2005) on both test systems. The 'originating' database was on a SQL 2005 instance (although testing was briefly performed on SQL 2000 and SQL 2008 to validate compatibility). The 'originating' server is called TestServer35 and the database is on an instance called Dev05; the database on both instances is called AdventureWorks2005. This information becomes important when using the sp_addlinkedserver command. I used the following TSQL code:

EXEC sp_addlinkedserver

@server='TestServer35-5',

@srvproduct='',

@catalog='AdventureWorks2005',

@provider='SQLNCLI',

@datasrc='TestServer35\Dev05'

As you can see, the linked server is referenced as TestServer35-5. We will use this reference in a four-part identifier (Server.Catalog.Schema.Table). The next obstacle is obtaining a list of tables and their row counts. For this I used a script I had modified last year, since it runs on both SQL 2005 and SQL 2000 (you can view it in SQLServerCentral.com's Scripts section at: http://www.sqlservercentral.com/scripts/Administration/61766/). I take its results and store them in a temporary table, and I do the same for the new database (on the local server where this stored proc runs).

Then comes the simple part: joining the two temp tables into a final temp table. I chose this route because I wanted each database's results in its own temp table in case I want to work with that data later, which I will in my update to determine whether a table is missing from one of the databases.

Here is the TSQL code I used (remember, if you want to use this you will need to change the linked server information to match your environment, and create the stored proc in the appropriate database):
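Before going further, it can be worth confirming that the linked server reference described above actually resolves. The following is only a minimal sketch using the linked server and database names from this article. It assumes SQL Server 2005 or later on the local server (sp_testlinkedserver is not available on SQL 2000) and that your login is already able to connect to the remote instance.

```sql
-- Check that the linked server defined earlier can be reached.
-- An error is raised if the connection test fails.
EXEC sp_testlinkedserver N'TestServer35-5';

-- Four-part identifier: Server.Catalog.Schema.Table
-- Counts the user tables visible in the remote database.
SELECT COUNT(*) AS RemoteUserTables
FROM [TestServer35-5].[AdventureWorks2005].[dbo].[sysobjects]
WHERE xtype = 'U';
```

If the second query returns a count, the same four-part naming should work inside the stored procedure.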

--Change the database name to the appropriate database

USE [AdventureWorks2005];

GO

CREATE PROCEDURE sp_CompareTables_all

AS

CREATE TABLE #tblNew

( tblName varchar(50), CountRows int )

INSERT INTO #tblNew

( tblName, CountRows)

SELECT o.name AS "Table Name", i.rowcnt AS "Row Count"

FROM sysobjects o, sysindexes i

WHERE i.id = o.id

AND indid IN(0,1)

AND xtype = 'u'

AND o.name <> 'sysdiagrams'

ORDER BY o.name

CREATE TABLE #tblOLD

( tblName varchar(50), CountRows int )

INSERT INTO #tblOLD

( tblName, CountRows)

SELECT lo.name AS "Table Name", li.rowcnt AS "Row Count"

--********

--Replace TestServer35-5 and AdventureWorks2005 below with your appropriate values

--********

FROM [TestServer35-5].[AdventureWorks2005].[dbo].[sysobjects] lo,

[TestServer35-5].[AdventureWorks2005].[dbo].[sysindexes] li

WHERE li.id = lo.id

AND indid IN(0,1)

AND xtype = 'u'

AND lo.name <> 'sysdiagrams'

ORDER BY lo.name

CREATE TABLE #tblDiff

( OldTable varchar(50), OldRowCount int, NewRowCount int, NewTableName varchar(50))

INSERT INTO #tblDiff

( OldTable, OldRowCount, NewRowCount, NewTableName )

SELECT ol.tblName, ol.CountRows, nw.CountRows, nw.tblName

From #tblNew nw

JOIN #tblOLD ol

--Join on table name only so every table is listed, whether or not the counts match

ON (ol.tblName = nw.tblName)

SELECT * FROM #tblDiff

DROP TABLE #tblNEW

DROP TABLE #tblOLD

DROP TABLE #tblDiff

You then simply execute the stored procedure with the following TSQL:

EXECUTE sp_CompareTables_All

The results of this script are shown in Figure 1 (above).

Now, this is great if you want to have a list that you can go through yourself to verify each table matches in row counts. But, what if that database has 1000 or more tables? What if you are just, simply put, lazy? Why not utilize SQL Server to process this information for you?

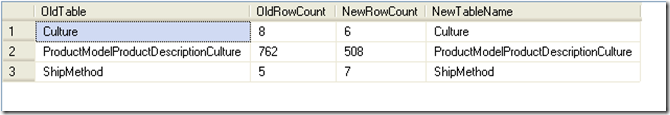

Well, I sure enough did just that. With a very small modification to this script you can easily have it only display any tables that don't match up in record counts.

All you have to do is change the "ON" clause in the INSERT INTO #tblDiff block so that it joins only when the CountRows values are NOT equal. The following is the modified line; the rest of the stored procedure remains the same:

ON (ol.tblName = nw.tblName AND ol.CountRows <> nw.CountRows)

I also renamed the stored procedure from "sp_CompareTables_All" to "sp_CompareTables_Diff", but this is optional; it simply makes it clearer which stored proc is being used.

To get some results, I made a few modifications to the AdventureWorks2005 database: I added a couple of rows to one table and removed some rows from two others. The results of the stored proc showing only the mismatched tables are shown in Figure 2.
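If you would rather not keep two nearly identical procedures, one alternative is to let a single switch decide which rows come back. The sketch below is not part of the original script: the @DiffOnly variable and the sample rows are hypothetical, and the two temp tables simply stand in for the ones the stored procedure builds. It sticks to syntax that also runs on SQL 2000.

```sql
-- Hypothetical sample data standing in for the two temp tables
-- that the stored procedure builds.
CREATE TABLE #tblOLD ( tblName varchar(50), CountRows int );
CREATE TABLE #tblNew ( tblName varchar(50), CountRows int );

INSERT INTO #tblOLD ( tblName, CountRows ) VALUES ( 'Contact', 100 );
INSERT INTO #tblOLD ( tblName, CountRows ) VALUES ( 'Address', 50 );
INSERT INTO #tblNew ( tblName, CountRows ) VALUES ( 'Contact', 100 );
INSERT INTO #tblNew ( tblName, CountRows ) VALUES ( 'Address', 48 );

-- @DiffOnly is a hypothetical switch: 0 = all tables, 1 = mismatches only.
DECLARE @DiffOnly bit;
SET @DiffOnly = 1;

SELECT ol.tblName   AS OldTable,
       ol.CountRows AS OldRowCount,
       nw.CountRows AS NewRowCount,
       nw.tblName   AS NewTableName
FROM #tblNew nw
JOIN #tblOLD ol
  ON ol.tblName = nw.tblName
WHERE @DiffOnly = 0
   OR ol.CountRows <> nw.CountRows;

DROP TABLE #tblOLD;
DROP TABLE #tblNew;
```

With @DiffOnly set to 1, only the Address row (50 versus 48) is returned; setting it to 0 returns both tables. In a real procedure the variable would become a parameter.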

Figure 2 (results of running sp_CompareTables_Diff)

As you can see, changing this script to show all tables or only the mismatched tables is very simple. Even setting up the script is simple; the hardest part of the whole thing is adding a linked server (which is fairly simple too).

In a future post I'll revisit this script and include the ability to display tables that exist in one database but not in the other. Be sure to check back for this update.
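One way this might be approached, purely as a sketch and not the finished update, is a FULL OUTER JOIN between the two temp tables, keeping only the rows where one side has no match. The table names and row counts below are hypothetical sample data so the snippet can be run on its own.

```sql
-- Hypothetical sample data: 'Legacy' exists only in the old database,
-- 'Audit' exists only in the new one.
CREATE TABLE #tblOLD ( tblName varchar(50), CountRows int );
CREATE TABLE #tblNew ( tblName varchar(50), CountRows int );

INSERT INTO #tblOLD ( tblName, CountRows ) VALUES ( 'Contact', 100 );
INSERT INTO #tblOLD ( tblName, CountRows ) VALUES ( 'Legacy', 10 );
INSERT INTO #tblNew ( tblName, CountRows ) VALUES ( 'Contact', 100 );
INSERT INTO #tblNew ( tblName, CountRows ) VALUES ( 'Audit', 5 );

-- A FULL OUTER JOIN keeps unmatched rows from both sides;
-- the NULL checks then filter down to tables missing from one database.
SELECT ol.tblName   AS OldTable,
       ol.CountRows AS OldRowCount,
       nw.CountRows AS NewRowCount,
       nw.tblName   AS NewTableName
FROM #tblOLD ol
FULL OUTER JOIN #tblNew nw
  ON ol.tblName = nw.tblName
WHERE ol.tblName IS NULL   -- table exists only in the new database
   OR nw.tblName IS NULL;  -- table exists only in the old database

DROP TABLE #tblOLD;
DROP TABLE #tblNew;
```

Against this sample data the query returns the Legacy and Audit rows, each with NULLs on the side where the table is missing, while Contact is left out because it exists in both.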

Until next time...Happy Coding!!